Target IT Solution Delivery Model

Note 1: The authoritative source and latest version of this Strategy are on ESDC’s internal network.

Note 2: This page links to internal ESDC documents, which are unfortunately accessible only within the ESDC corporate network.

Table of Contents

- Introduction

- Guiding Policy

- Coherent set of actions

- Measuring the Strategy’s success

- Approach to implementation (Multi-staged)

- Appendix A - Diagnostic (challenges holding us back)

- Appendix B - Traceability Matrix

- Appendix C - References

- Appendix D - Definitions

- Appendix E - Acronym List Definition

- Appendix F - Statistics regarding Large IT-Enabled Projects

Introduction

Purpose

To provide ESDC with a path towards same-day software delivery.

The strategy includes:

- A diagnostic, which forms part of the business case for the strategy by defining the problems holding us back

- A guiding policy, which serves to communicate a decision by the CIO and to describe the target state

- A coherent set of actions (an action plan), which serve to operationalize the guiding policy

The intent behind this strategy is to communicate a decision by the CIO (not yet approved) on a path forward (the Guiding Policy), and the investments needed to operationalize that decision (the coherent set of actions).

Target Audience

This strategy document targets stakeholders involved in determining how IT Solutions are delivered, more specifically those involved in defining the rules for acquiring (whether building, buying, adopting, or configuring), delivering, operationalizing, and maintaining IT Solutions (see Appendix D for meaning). This includes both IITB and non-IITB stakeholders, such as CFOB, IAERMB, CDO, and SSC. These stakeholders are listed in the Coherent set of actions section and are expected to participate in the implementation of this strategy.

The Guiding Policy, once operationalized, will target stakeholders involved when IT-enabled Projects are conceptualized and created, when IT Solutions are architected, and when IT Products are developed, delivered, operationalized, and maintained. All ESDC personnel involved in IT investment decisions are expected to adhere to this Guiding Policy.

Business Case (Strategic Context)

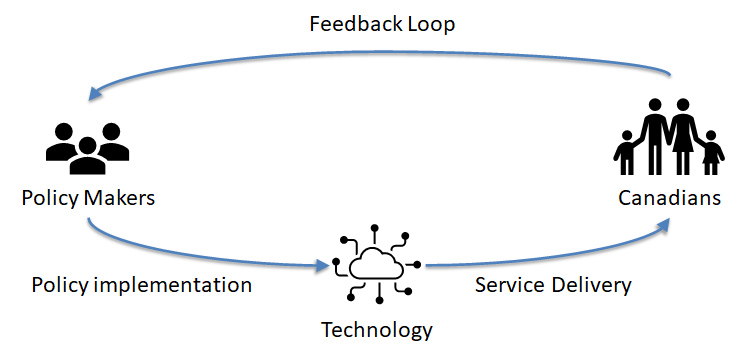

Moving to the digital age exposes the ubiquitous nature of technology in delivering services to Canadians. As such, for ESDC to iterate on its policies and service offerings[1], it will need to make changes to its technologies. To better deliver services to Canadians, we need to improve IT’s responsiveness; otherwise, the feedback loop between policy makers and Canadians will be too long, limiting ESDC’s ability to iterate on its policy making and become an agile organization.

Figure 1. In the Digital age, technology is between Policy Makers and Canadians


ESDC requires production deployments to gain the empirical evidence needed for evidence-based decision-making.

ESDC is not a new department. Over its decades of operation, it has accumulated a significant amount of technical debt[2] that affects the responsiveness of IT. ESDC’s application portfolio contains more than 500 applications[3], 101 of which are mission-critical and depend on legacy technologies (aged custom-built applications and a mainframe). In response to the limitations of these antiquated IT systems, ESDC has started investing in a Business Delivery Modernization Programme[4].

However, IT is a risky and costly investment[5].

Most of the recommendations from external audits[6] on reducing the risks of technology investments seem to call for stronger, more disciplined governance, on the premise that a more accurate prediction of the future is the key to reducing those risks.

However, to work effectively in the digital world, we must first accept complexity and uncertainty, for they demand a very different approach to carrying out initiatives. A predictable world rewards advance planning and rigid plan execution, but a complex and uncertain world rewards an empirical cycle of trying, observing, and correcting[7].

The current methods of managing IT investments seek two broad goals[8]:

- Managing risks associated with IT investments

- Placing investments where there are benefits

This strategy seeks to advance both goals by moving ESDC to the point where same-day software deployments are possible and common practice. Frequent, small, automated deployments are expected to reduce risk[9], reduce technical debt, increase client satisfaction, and increase overall confidence in the department and its staff. The adoption of Cloud technologies and DevOps practices now makes this possible.

Moving to Digital increases the focus on Data as the asset the organization cares about. It is through Data that the organization will gain insights and inform its service improvement decisions[10]. The ability to rapidly make software changes will require treating Data as a separate construct that is not dependent on the software but is instead interfaced with it. See Appendix D (Definitions) for the relationships between IT Solution, Application, Software, and Data.
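One common way to keep Data separate from the software is a data access layer: application code depends only on an interface, and the code that actually reads and writes the organization’s Data sits behind it. The Python sketch below illustrates the idea under stated assumptions: `ClientRecord`, `ClientRepository`, `InMemoryClientRepository`, and `active_clients_in` are hypothetical names, not existing ESDC components, and a real implementation would place a database-backed class behind the same interface.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass(frozen=True)
class ClientRecord:
    """Hypothetical record owned by the data layer, not by any one application."""
    client_id: str
    province: str
    active: bool


class ClientRepository(Protocol):
    """The only view of the Data that application code is allowed to use."""

    def get(self, client_id: str) -> Optional[ClientRecord]: ...

    def list_active(self) -> list[ClientRecord]: ...


class InMemoryClientRepository:
    """Stand-in store; a database-backed class with the same methods could
    replace it without touching the application logic below."""

    def __init__(self, records: list[ClientRecord]) -> None:
        self._records = {r.client_id: r for r in records}

    def get(self, client_id: str) -> Optional[ClientRecord]:
        return self._records.get(client_id)

    def list_active(self) -> list[ClientRecord]:
        return [r for r in self._records.values() if r.active]


def active_clients_in(repo: ClientRepository, province: str) -> list[str]:
    """Application logic: depends on the interface, never on storage details."""
    return sorted(r.client_id for r in repo.list_active() if r.province == province)


repo = InMemoryClientRepository([
    ClientRecord("c1", "ON", True),
    ClientRecord("c2", "QC", True),
    ClientRecord("c3", "ON", False),
])
print(active_clients_in(repo, "ON"))  # ['c1']
```

Because the application only sees `ClientRepository`, the data team can change how Data is stored (schema, database, or service) while application teams keep deploying independently, which is the decoupling this strategy relies on.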

In short, this strategy seeks to change organizational conditions so that ESDC can successfully achieve its digital transformation[11].

This strategy will capitalize on existing IT initiatives (such as the IITB Way Forward, the PwC Independent Study, the Cloud Operations framework, and the Technical Debt remediation programme) by drawing attention to them and complementing them with new activities.

This strategy’s goal is to define the Target State of IT Solution Delivery and to provide a roadmap for reaching it.

Appendix A - Diagnostic (challenges holding us back) explains the challenges keeping us from achieving same-day software delivery.

Guiding Policy

The following policy reflects the decision adopted by the CIO of ESDC (approval by CIO not yet obtained) for this Target IT Solution Delivery Model. Each policy statement is a declaration of that decision and has received the endorsement of the governance body for its area (endorsements not yet obtained; see Coherent set of actions).

This policy becomes active when IT Solutions are to be delivered. Once active, all teams involved in the project, and the IT products involved in the IT solution, must comply with this guiding policy.

This Guiding Policy has been prepared with alignment to, and compliance with, existing policy instruments in mind, and does not replace them. Stakeholders are still expected to comply with existing policy instruments, including, but not limited to:

- ESDC Policy on Project and Programme Management (PPPM)

- ESDC Directive on Programme Management

- ESDC Directive on Project Management

- ESDC Procurement Policies

- ESDC Data Strategy

- TB Directive on Service and Digital

- TB Directive on Security Management

- TB Directive on Automated Decision-Making

Governance, Compliance, and Reporting

- DevOps teams have the authority to deploy changes in production using trusted DevOps pipelines

- DevOps teams are defined as per the <IITB standard definition>

- DevOps pipelines meet the <minimum standard change management criteria> to promote software up to, and including, production

- DevOps teams publish their development metrics internally to ESDC as defined by the <standard development metrics for DevOps teams>

- IT Products publish their production metrics at minimum internally to ESDC as defined by the <standard production metrics for IT Products>

- Security Assessment and Authorization (SA&A) uses the <Target-SA&A methodology>

- Accessibility Assessment uses the <Target-Accessibility Assessment methodology>

- Financial Audit Assessment uses the <Target-Financial Assessment methodology>

- Automated Decision Systems Assessment uses the <Target ADS Assessment methodology>

- IT-Enabled Projects produce production-ready IT Products at minimum every 6 months

- IT-Enabled Projects start when the minimum IT-Enabled project intake conditions are met, as defined by the <IT-enabled project intake condition standard>

- IT-Enabled Projects follow the <IT-Enabled Project Agile Governance Framework>

Capacity Planning

- Capacity Planning uses DevOps teams as units (i.e., not individuals)

- IT-Enabled Projects are assigned a combination of pre-defined DevOps team(s)

Architecture

- IT Solutions are loosely coupled to Business Capabilities, as defined by the <Adopt, Build, Buy Strategy>

- Applications, making up IT Solutions, are loosely coupled with the organization’s Data

- Software components, making up Applications, are loosely coupled between them

- IT Products can test, deploy, and make technical changes without depending on the DevOps teams of other IT Products

- DevOps teams have the authority to choose their IT Products’ technical stacks, unless those stacks are in the Containment or Retirement category, as defined by the <Technology Standards Management>

- IT Products expose their functionalities and data via secure APIs

- IT-Enabled Projects affecting Legacy Applications are scoped to use the <strangler design pattern> in alignment with the <Target Architecture Vision for Legacy Applications>

- Legacy Applications are systems in the <ESDC Legacy Applications Standard List>
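The strangler design pattern referenced above places a routing facade in front of a legacy application and redirects one capability at a time to new IT Products, until the legacy code can be retired. The Python sketch below is illustrative only: it assumes simple path-prefix routing, and the handlers `legacy_system` and `modern_service` are hypothetical stand-ins for real systems, not the design prescribed by the Target Architecture Vision.

```python
from typing import Callable

Handler = Callable[[str], str]  # takes a request path, returns a response


def legacy_system(path: str) -> str:
    """Hypothetical stand-in for the existing legacy application."""
    return f"legacy:{path}"


def modern_service(path: str) -> str:
    """Hypothetical stand-in for a newly built IT Product."""
    return f"modern:{path}"


class StranglerFacade:
    """Sends a request to the new service if its capability has been
    migrated, otherwise to the legacy system."""

    def __init__(self, legacy: Handler, modern: Handler) -> None:
        self._legacy = legacy
        self._modern = modern
        self._migrated_prefixes: set[str] = set()

    def migrate(self, prefix: str) -> None:
        # Migrating a capability is only a routing change.
        self._migrated_prefixes.add(prefix)

    def handle(self, path: str) -> str:
        if any(path.startswith(p) for p in self._migrated_prefixes):
            return self._modern(path)
        return self._legacy(path)


facade = StranglerFacade(legacy_system, modern_service)
print(facade.handle("/benefits/apply"))  # legacy:/benefits/apply
facade.migrate("/benefits")
print(facade.handle("/benefits/apply"))  # modern:/benefits/apply
print(facade.handle("/payments/status"))  # legacy:/payments/status
```

Since each migration step is a small routing change, it can be deployed and rolled back on its own, which fits the small, frequent deployments this strategy aims for. Plain prefix matching would also route a path such as `/benefitsX`; a production router would match on whole path segments.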

Product Management

- IT Solutions and Applications are managed as Products (referred to as IT Products)

- IT Products have a single Product Owner with the necessary authority to approve changes to them

- IT Products are supported by one or more DevOps teams

- IT-Enabled Projects fill backlogs of the IT Products in scope of the project

- DevOps teams support their IT Products in production and are accountable for their uptime

- IT Product backlog items are categorized as non-discretionary or discretionary

DevOps

- DevOps teams use a pipeline to control the release process from commit to production

- The DevOps pipeline allows for manual intervention, if required

- DevOps teams are composed of a maximum of 9 multidisciplinary members

- DevOps teams use build automation

- DevOps teams use test automation for:

  - Unit testing

  - Integration testing

  - Security testing

  - Accessibility testing

  - Performance testing

  - Functional testing

  - Smoke testing

- DevOps teams use Continuous Integration

- DevOps teams use Application Release Automation

- DevOps teams use a Git-based version control system

- DevOps teams use automated monitoring
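The pipeline statements above can be pictured as an ordered series of automated gates that a commit must pass before reaching production, with an optional manual approval step. The Python sketch below is a simplified model under stated assumptions: the `Pipeline` and `StageResult` types are hypothetical, each check is a placeholder for a real build or test job, and in practice this logic lives in a CI/CD tool rather than in application code.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Check = Callable[[str], bool]  # takes a commit id, returns True if the gate passes


@dataclass
class StageResult:
    name: str
    passed: bool


@dataclass
class Pipeline:
    """Promotes one commit through ordered gates, stopping at the first failure."""

    stages: list[tuple[str, Check]]
    # Optional human checkpoint before production ("manual intervention").
    manual_approval: Optional[Check] = None

    def run(self, commit: str) -> tuple[bool, list[StageResult]]:
        results: list[StageResult] = []
        for name, check in self.stages:
            passed = check(commit)
            results.append(StageResult(name, passed))
            if not passed:
                return False, results  # the commit never reaches production
        if self.manual_approval is not None:
            approved = self.manual_approval(commit)
            results.append(StageResult("manual approval", approved))
            if not approved:
                return False, results
        # Stand-in for the actual release step.
        results.append(StageResult("deploy to production", True))
        return True, results


def always_pass(commit: str) -> bool:
    """Placeholder for a real build or test job."""
    return True


def reviewer_holds_release(commit: str) -> bool:
    """Placeholder for a person choosing to intervene."""
    return False


stage_names = ["build", "unit tests", "integration tests", "security tests",
               "accessibility tests", "performance tests", "functional tests",
               "smoke tests (pre-production)"]
pipeline = Pipeline([(name, always_pass) for name in stage_names],
                    manual_approval=reviewer_holds_release)
deployed, results = pipeline.run("abc123")
print(deployed, results[-1].name)  # False manual approval
```

Every gate result is recorded, so the same run that controls the release also produces the evidence a change management review or the development metrics would draw on.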

Coherent set of actions

The following are actions that need to be performed in order to make the Target IT Solution Delivery Model operational.

| Policy Category | Action | Description | Lead | Contributor(s) |
|---|---|---|---|---|
| Governance | Review IT Project Governance decision chain | Review the terms of reference of governance committees involved in the IT-Enabled Project PMLC and propose recommendations to allow the Target State to operate. e.g.: | IITB Governance | DGPOC OCMC ARC EARB (maybe) PPRC PPOC CIPSC IT Strategy CFOB IPPM |
| | Adjust IITB SDLC | Adjust IITB's SDLC to fit within the new IT-Enabled Project Agile Governance Framework | BPMO | Senior Advisors |
| | Produce IT-enabled project intake condition standard | Produce a list of conditions that IT-Enabled Projects must meet before project intake can accept them. e.g. | ITSM | BPMO BRM Enterprise Architecture Business Architecture CFOB IPPM CDO |
| | Produce IT-Enabled Project Agile Governance Framework | Produce a standard framework for governing IT-Enabled Projects under the Target IT Solution Delivery Model. A standard framework is needed to reduce confusion, ensure coherence between teams, and keep governance lightweight to leave room for agility. Development Value Streams and the KPIs to report on are expected to be defined within this framework. Similar to the draft BDM Digital Experience and Client Data (DECD) Agile Governance available here. | BPMO | Senior Advisors Enterprise Architecture BRM IITB Governance CFOB IPPM |
| | Produce a Policy guidance document | Produce a guidance document supporting the Target IT Solution Delivery Model guiding policy, demonstrating what it looks like and how teams taking part in it can ensure their compliance | IT Strategy | BPMO CCoE Senior Advisors BRM CFOB IPPM |
| | Produce standard development metrics for DevOps teams | Produce a minimum list of development metrics that DevOps teams must publish. The purpose of these metrics is to provide insights into the development maturity of teams and the quality assurance levels of IT Products before they reach production. e.g.: | TSDM Change Mgt team | Senior Advisors DTS Interoperability BPMO Research & Prototype CCoE |
| | Produce standard production metrics for IT Products | Produce a minimum list of production metrics that IT Products must publish. The purpose of these metrics is to provide insights into the health and behaviour of IT Products once in production, to inform further IT Product enhancements. e.g.: | TSDM Change Mgt team | Senior Advisors DTS Interoperability BPMO Research & Prototype CCoE |
| | Produce standard definition for “DevOps team” | Produce a standard definition listing the minimum requirements, roles, and responsibilities for a team to qualify as DevOps. This is to reduce confusion and misuse of the term, since the Guiding Policy grants such teams more authority. Draft copy (main document on Office 365 available to ESDC staff). | TSDM Change Mgt team | BPMO CCoE Senior Advisors Research & Prototype Interoperability DTS |
| Compliance | Produce Target SA&A Process | Produce a Target SA&A Process that favours and incentivizes DevOps automation capabilities, test results, product evolution, and trusted DevOps pipelines. | IT Security | IITB Compliance Unit Senior Advisors |
| | Produce Target Financial Assessment Methodology | Produce a Target Financial Assessment Methodology that favours and incentivizes DevOps automation capabilities, test results, and product evolution. Audit processes in scope include internal ESDC audits, not external auditing entities. | IAERMB | IITB Compliance Unit Senior Advisors CFOB ICAAD |
| | Produce Target Accessibility Assessment Methodology | Produce a Target Accessibility Assessment Methodology that favours and incentivizes DevOps automation capabilities, test results, and product evolution | Accessibility | BPMO Senior Advisors |
| | Produce Target ADS Assessment Methodology | Produce the Target Automated Decision System Assessment Methodology to ensure that any software intended for automated decision-making complies with the Directive on Automated Decision-Making, including Privacy and Legal controls that can be automated. | AI CoE | CDO Privacy Management Division Legal Services |
| | Produce Automated Testing guidance and standards | Provide guidance to DevOps teams on using automated testing for the various types of tests in scope. Provide standards on acceptable thresholds for automating application releases between environments (up to, and including, production). | TSDM Change Mgt team | IT Security Accessibility Testing Services Senior Advisors |
| Architecture | Review technical brick process | Review the technical bricks management process to allow IT Product teams to adopt non-standard technical stacks, in addition to recommending emerging standards. Evaluate the conditions and responsibilities of stakeholders regarding non-standard technical stacks, as well as where approvals are warranted. | Technical Architecture | TSWG CCoE OCMC IT Strategy Senior Advisors EARB |
| | Define API approval process | Formally define the API approval process to allow DevOps teams to expose their products’ functionality and data via secure APIs. Provide standards that DevOps teams are expected to comply with when releasing secure APIs, such as data exchange formats, reference data model, security controls, and mandatory procedures for API Assessments (Directive on Service and Digital). | Interoperability | Enterprise Architecture Solutions Architecture CDO |
| | Define Target Architecture Vision for Legacy Environments | Define the target architecture vision for legacy environments to enable the transition towards BDM and the Target IT Solution Delivery Model. The Target Architecture Vision is to provide direction and set reasonable expectations for DevOps teams in a legacy environment. Its content is to provide a conceptual view of the layered architectures (presentation, integration, system/applications, information/data, networking, and security) and reference architectures that project teams can implement (see strangler patterns). | Enterprise Architecture | Technical Debt Solution Architecture Technical Architecture BSI Benefits and Case Mgt Solutions |
| | Produce ESDC Legacy Application Standard List | Produce an official list of applications deemed “Legacy” from the APM portfolio. This standard list is used by the 7th Architecture guiding policy statement. | Enterprise Architecture | Technical Debt |
| | Produce Loose Coupling architecture guidance | Produce a guidance document to clarify and guide Architects and DevOps teams in building IT Solutions that use loose coupling architecture principles, giving DevOps teams more autonomy. Two areas of loose coupling are to be addressed: 1. Between software components making up an Application (e.g., adopting Micro-Services, 12factor.net app principles); 2. Between the Application and the organization’s Data (e.g., adopting database change management practices for DevOps, providing Data Access Layers to DevOps teams). The guidance document is expected to include architecture and design patterns, principles, and sources of reusable code snippets while maintaining adherence to data management expectations. | Solution Architecture | DAS CDO Senior Advisors Interoperability |
| Product Management | Produce a team lending model | Engage with the Resource Centre to provide an alternative means of deploying resources to projects, one that favours small, dedicated, multidisciplinary teams over individuals, and to produce enabling teams responsible for accelerating other teams' transition towards DevOps (see definition of “DevOps team” and the different types of teams) | Resource Centre | Senior Advisors DTS Interoperability |
| | Classify IT Products and their ownership | Classify the official list of IT Product offerings and identify a single IT Product Owner for each one. | TBD | Technical Debt (APM) Enterprise Architecture |
| | Create a matrix-based resource pool model | Create a model where specialized resources, Champions or SMEs, can be deployed in DevOps teams | TSDM Change Mgt team | Resource Centre IT Security Accessibility Testing Services CCoE |
| DevOps | Get endorsement from SSC | Engage with SSC to obtain their endorsement for allowing DevOps teams to deploy directly in production (on-premise deployment model) | TSDM Change Mgt team | IT Security SSC |
| | Produce standard change management criteria | Produce standard change management criteria that all pipelines must meet, at minimum, before they can automatically promote code to the pre-production and production environments. e.g. | TSDM Change Mgt team | IT Security Accessibility Testing Services AI CoE IITB Compliance Unit |
| | Produce DevOps ConOps guidelines | Produce DevOps ConOps guidelines, including release processes and standards, on releasing code from commit to production (e.g., pre-prod environment, blue-green model) | CCoE | IITB Senior Advisors IT Security BPMO Cloud Ops |
| | Provide means for DevOps teams to experiment with new tools | Leverage the SAFER LAB, Virtual Desktop Image, and Technical Architecture standards to provide means for DevOps teams to install and try out innovative tools for experimentation, with eventual rapid updates to the Technical Bricks | IT Research & Prototype | CCoE Research & Prototype IT Security IT Environment TSWG |

Measuring the Strategy’s success

This Strategy’s success will be measured by comparing the following metrics under the Target Model against those under the conventional IT project methodology.

The metrics are defined as follows:

- Lead time for change: the time code changes take to go from check-in to release in production

- Deployment frequency: the rate at which software is deployed to production or released to end users

- Change failure rate: how often deployments to production fail and require immediate remedy

- Time to restore: the time it takes from detecting a user impacting incident to remediating it

- Client satisfaction: the general level of client contentment with an IT Product, by application within the APM portfolio
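To illustrate automatic collection, the first four metrics defined above can be computed from deployment and incident records. The Python sketch below assumes hypothetical `Deployment` and `Incident` records and uses the median for lead time and time to restore (a common choice, but an assumption here); the sample data is made up for the example, and all functions assume non-empty inputs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class Deployment:
    commit_time: datetime  # when the change was checked in
    deploy_time: datetime  # when the change reached production
    failed: bool           # True if it caused a failure needing immediate remedy


@dataclass(frozen=True)
class Incident:
    detected: datetime  # when the user-impacting incident was detected
    restored: datetime  # when service was restored


def lead_time_for_change(deployments: list[Deployment]) -> timedelta:
    """Median time from check-in to release in production."""
    return median(d.deploy_time - d.commit_time for d in deployments)


def deployment_frequency(deployments: list[Deployment], period_days: int) -> float:
    """Average number of production deployments per day over the period."""
    return len(deployments) / period_days


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Share of production deployments that required immediate remedy."""
    return sum(d.failed for d in deployments) / len(deployments)


def time_to_restore(incidents: list[Incident]) -> timedelta:
    """Median time from detecting an incident to restoring service."""
    return median(i.restored - i.detected for i in incidents)


# Made-up sample data: three deployments in a 7-day window, one incident.
start = datetime(2024, 1, 1, 9, 0)
deployments = [
    Deployment(start, start + timedelta(hours=2), failed=False),
    Deployment(start + timedelta(days=1), start + timedelta(days=1, hours=4), failed=True),
    Deployment(start + timedelta(days=2), start + timedelta(days=2, hours=3), failed=False),
]
incidents = [Incident(start + timedelta(days=1, hours=5), start + timedelta(days=1, hours=6))]

print(lead_time_for_change(deployments))               # 3:00:00
print(round(deployment_frequency(deployments, 7), 2))  # 0.43
print(round(change_failure_rate(deployments), 2))      # 0.33
print(time_to_restore(incidents))                      # 1:00:00
```

In a Target Model team, the records would come from the team’s own pipeline and incident tracking tool rather than being typed in by hand, which is what makes these metrics cheap to publish continuously.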

| Metric | Collection Method: Target Model | Collection Method: Conventional Model |
|---|---|---|
| 1. Lead time for change | Automatic: using the DevOps team's own pipeline metrics | Manually: using the Release team's statistics per change request |
| 2. Deployment frequency | Automatic: using the DevOps team's continuous delivery pipelines | Manually: using the Release Process team's metrics |
| 3. Change failure rate | Automatic: TBD | Manually: using the Release Process team's change debrief log |
| 4. Time to restore service | Automatic: using the DevOps team's incident tracking tool (time incident submitted to time incident closed) | Automatic: using the Service Desk incident tracking tool (time incident submitted to time incident closed) |
| 5. Client satisfaction | Manually: BRM's client survey | Manually: BRM's client survey |

Manually: the collection of data requires manual intervention (e.g., surveys using the SimpleSurvey software, interviews, emails, spreadsheet updates).

Automatic: the collection of data is performed automatically, usually through programmatic means (e.g., an event triggered by the Git repository when a new commit is pushed updates a master dashboard “view”).

NOTE: the first four metrics (1 to 4) are the four key metrics defined by DevOps Research and Assessment (DORA).

Approach to implementation (Multi-staged)

It is understood that the strategy’s ambition will not be realized through a big-bang approach or within a couple of years. It will instead be implemented iteratively towards the target state, in four stages:

- Stage 1: Foundation and awareness

  - Expose existing ESDC IT teams already working close to or at the Target State by promoting their visibility in the organization and granting them the authority to operate under the Target State (e.g., teams in the CPP-E project).

  - Sanction 1 IT Product to operate under the new Target State model

  - Sanction 1 IT-Enabled Project to operate under the new Target State model

  - Build a temporary, funded DevOps Community of Excellence (3-year lifespan) whose members act as change agents and coaches for existing ESDC IT teams in their DevOps adoption

  - Identify “Champions” by function. These champions provide coaching and mentoring to other teams (i.e., the start of the “enabling teams” that the Team Topologies[12] concept refers to)

  - Raise awareness of the need for System Administrators to move towards becoming Site Reliability Engineers

- Stage 2: Infrastructure and Legacy readiness

  - Identify 2 IT-Enabled Projects touching legacy systems for piloting, and scope them towards the Target State direction (as per the Target Architecture Vision for Legacy)

  - Provide common DevOps pipelines for IT teams to use (funded, having passed SA&A, and adopted as Technical Standards)

  - Experiment with non-production environments for legacy systems in the public cloud (e.g., testing environments)

- Stage 3: Scale

  - Identify “Platform teams” that provide services to DevOps teams through tooling and APIs

  - Identify “Complicated subsystem teams” that provide specialized expertise to DevOps teams (e.g., mainframes, complex mathematics, analytics)

  - Produce an IITB organizational structure (oriented around multi-functional teams) and its associated financial model

- Stage 4: Normalize and manage Legacy

  - Mandate all greenfield IT-Enabled Projects (not touching legacy infrastructures) to operate under the Target State Model

  - Mandate all brownfield IT-Enabled Projects (touching legacy infrastructures) to be scoped towards the Target State direction (as per the Target Architecture Vision for Legacy)

Appendix A - Diagnostic (challenges holding us back)

The Policy on Service and Digital, reflecting citizens’ expectations, requires ESDC to periodically review the services it delivers to clients. Given the ubiquity of technology, any change to ESDC services will involve IT teams.

The Directive on Service and Digital highlights how much say departmental CIOs (and CDOs) have in how departments transform digitally, as the following breakdown of its requirements by responsible official shows.

| Official | % of Total Reqs | Mandatory Procedures (4) Reqs[13] |
|---|---|---|
| CIO (with CDO) | 84% | 229 |
| Service | 10% | 0 |
| Cyber Security | 6% | 74 |

The current methods of managing IT investments are guided by the following ESDC financial policy instrument: the Policy on Project and Programme Management (PPPM). This Policy produced the Standard on Project Management, which describes the key requirements for ESDC personnel operating in a project environment. The current standard seeks to manage risks and costs through advance planning and rigid plan execution. Its gating approach requires project teams to seek permission to continue, which is granted when they provide sufficient evidence of due diligence and an accurate picture of the future. This may work when the future is predictable, but not when it is complex and uncertain. An adverse effect is that it promotes feature bloat: the burden of seeking permission before proceeding deters teams from deploying small changes.

The highly integrated, complex, and uncertain Digital world warrants a move towards a more empirical cycle of trying, observing, and course correcting. The relationship between the Planning and Execution phases becomes cyclical: it is through execution that we gain the empirical evidence needed to inform our planning estimates and decide on course corrections. Recent practices, mainly Cloud and DevOps, now allow us to work this way. IT no longer requires lengthy waits to procure servers, the development of large code bases, or large capital investments in infrastructure. Cloud has commoditized IT infrastructure, enabling ESDC to rapidly develop, test, and deploy software.

In addition, the GC is moving away from monolithic solutions (large code bases that provide many capabilities but become bottlenecks and single points of failure in the IT ecosystem, as the many IT teams needed to work on them collide)[14].

The TB Directive on the Management of Projects and Programmes makes room for the above approach:

- [The Project Sponsor is responsible for:] 4.2.5 Maintaining effective relationships with key external stakeholders including implicated departments and common service providers

- [The Project Sponsor is responsible for:] 4.2.8 Applying as appropriate, incremental, iterative, agile, and user-centric principles and methods to the planning, definition, and implementation of the project

- [The Project Sponsor is responsible for:] 4.2.18 Establishing a project gating plan at the outset of the project, consistent with the department’s framework, that [4.2.18.1] Documents the decisions that will be taken at each gate, the evidence and information required in support of the gate decisions, the criteria used to assess the evidence, and the gate governance

These three requirements from the TB Directive indicate that departments must accept and adapt to change, and make evidence-based decisions (such as planning decisions). Such evidence can only be obtained through execution.

IITB has made efforts to modernize its management of technology, as showcased in the IITB News Kudo’s Corner[15] and the IITB Way Forward[16] plan. However, ESDC’s relationship with technology extends beyond IITB’s influence.

Moving ESDC towards being an agile organization requires a model that enables smaller, more frequent software deployments, because these give the organization the empirical evidence necessary for evidence-based decisions.

Appendix B - Traceability Matrix

The following traceability matrix is used to show alignment with various strategies, plans, and policy instruments already in progress.

| Strategy element | Aligns with |
|---|---|
| Governance and Compliance | GC Digital Standards/design with users; GC Digital Standards/iterate and improve frequently; GC Architecture Standards/Business Architecture; GC Architecture Standards/Security Architecture & Privacy; GC DOSP/Chapter 1 user-centred; GC DOSP/Chapter 6 digital governance; TB Policy on Service and Digital/4.2 client-centric service; TB Directive on Service and Digital/Strategic IT Management; TB Directive on the Management of Projects and Programs/4.2.6, 4.2.8, 4.2.18; IITB Way Forward/1. Adjust IITB leadership; IITB Way Forward/6a. Strengthen IM/IT Strategy; IITB Way Forward/6f. formalize requirements mgt framework; IT Plan/Section II/Foundational; PwC Independent Study/Recommendation 2.2; PwC Independent Study/Recommendation 2.4 |
| Capacity Planning | TB Policy on Service and Digital/Supporting workforce capacity and capability; PwC Independent Study/Recommendation 2.2 |
| Architecture | GC Digital Standards/iterate and improve frequently; GC Architecture Standards/Information Architecture; GC Architecture Standards/Application Architecture; GC Architecture Standards/Security Architecture & Privacy; GC DOSP/Chapter 3.2 Any-platform, any-device; GC DOSP/Chapter 4.3 Procurement modernization; GC DOSP/Chapter 4.4 IT Modernization; IITB Way Forward/6a. Strengthen Enterprise Architecture practice; IITB Way Forward/6e. Enhance app development; PwC Independent Study/Recommendation 2.4; ESDC Data Strategy/Access; ESDC Data Strategy/Data Management |
| Product Management | GC Digital Standards/iterate and improve frequently; GC Digital Standards/address security and privacy risks; GC Digital Standards/build accessibility from the start; GC Architecture Standards/Business Architecture; GC Architecture Standards/Security Architecture & Privacy; GC DOSP/Chapter 2.3 Accessibility and inclusion; TB Policy on Service and Digital/Supporting workforce capacity and capability; IITB Way Forward/6d. Separate programme/project; IITB Way Forward/6e. Enhance app development; PwC Independent Study/3.1 |
| DevOps | GC Digital Standards/address security and privacy risks; GC Architecture Standards/Technology Architecture; GC DOSP/Appendix A item #34; GC DOSP/Appendix A item #37; GC DOSP/Appendix A item #69; IITB Way Forward/6e. Enhance app development; IITB Way Forward/6g. Modernize IM/IT testing regime; IT Plan/Section II/Foundational; PwC Independent Study/2.2; PwC Independent Study/3.2 |

Appendix C - References

- ESDC Policy on Program and Project Management (PPPM)

- ESDC Directive on Project Management

- ESDC Directive on Benefits Management

- ESDC Project Management Life Cycle

- ESDC Data Strategy

- IITB Governance Structure

- IT Security SA&A Process

- SDLC Process (current and proposed alternate by Senior Advisors+BPMO)

- Team Compositions being investigated by Senior Advisors

- ESDC Procurement Policies

- TB Directive on Service and Digital

- TB Directive on Security Management

- TB Directive on the Management of Projects and Programmes

- TB Directive on Automated Decision-Making

- Scaled Agile Framework (SAFe)

- Disciplined Agile (DA)

- Large-Scale Scrum

- Good Strategy Bad Strategy (book by Richard Rumelt)

- How to write rules that people want to follow

Appendix D - Definitions

DevOps Team

A cross-functional, multidisciplinary team that emphasizes collaboration and communication between software developers and other information technology (IT) professionals while automating the process of software delivery and infrastructure changes. A DevOps team complies with the following standard IITB definition.

A copy of the Office 365 version is available here.

Decentralized Version Control System (DVCS)

Centralized version control systems are based on the idea that there is a single “central” copy of a software project somewhere (most likely on a server), and developers commit their code changes directly to this central copy.

A decentralized version control system (DVCS) does not necessarily rely on a central server to store all the versions of a software project’s files. Instead, every developer “clones” a copy of a repository and has the full history of the project on their own hard drive. This copy (or “clone”) has all of the metadata of the original. In a DVCS, developers typically make code changes on their local copy, test them there, and then “push” them to a central server hosting the “master” copy that the software project is intended to use.

Well-known DVCS include Git, Mercurial, and Bazaar.

ESDC will use Git to favour interoperability between source code repositories, recognizing that the market has moved towards Git-based source code repositories.
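As a toy illustration of the clone, commit, and push cycle described above, the sketch below models a repository’s history as an ordered list of commit messages. It is a hypothetical Python model, not Git’s actual data model (Git stores a content-addressed graph of commits), and the `Repository` class and its methods are illustrative names only. It does mirror one real behaviour: by default, Git refuses a push that is not a fast-forward of the remote branch.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Repository:
    """Toy repository (hypothetical model): history is an ordered list of commit messages."""
    history: List[str] = field(default_factory=list)

    def clone(self) -> "Repository":
        # A clone receives a full, independent copy of the project's history.
        return Repository(history=list(self.history))

    def commit(self, message: str) -> None:
        # Commits are recorded locally; no server is contacted.
        self.history.append(message)

    def push(self, remote: "Repository") -> None:
        # Accept only a fast-forward: the remote history must be a prefix of the
        # local history, otherwise the developer must integrate remote changes first.
        if self.history[: len(remote.history)] != remote.history:
            raise ValueError("push rejected: remote has changes not in local history")
        remote.history = list(self.history)


central = Repository(history=["initial commit"])
alice = central.clone()
bob = central.clone()

alice.commit("add login page")  # local only; central is unchanged
alice.push(central)             # central now contains Alice's commit

bob.commit("fix typo")
try:
    bob.push(central)           # rejected: Bob's clone lacks Alice's commit
except ValueError as err:
    print(err)
```

In a real Git workflow, Bob would fetch and merge (or rebase onto) Alice’s commit before pushing again.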

Development Value Stream

The series of steps, and the people who carry them out, that ESDC uses to implement the IT Solutions enabling the enterprise to carry out its business operations. The people in a development value stream are organized as a set of DevOps teams.

Organizing portfolios around development value streams makes the flow of work needed to produce a solution visible, reduces handoffs and delays, enables faster learning and shorter time to market, and supports leaner development and budgeting methods.

IT Solution, Application, Software, and Data

A standard definition is expected to be produced by EARB (see the Adopt, Build, Buy strategy’s coherent set of actions). Until it is available, the following definitions and relationships are used.

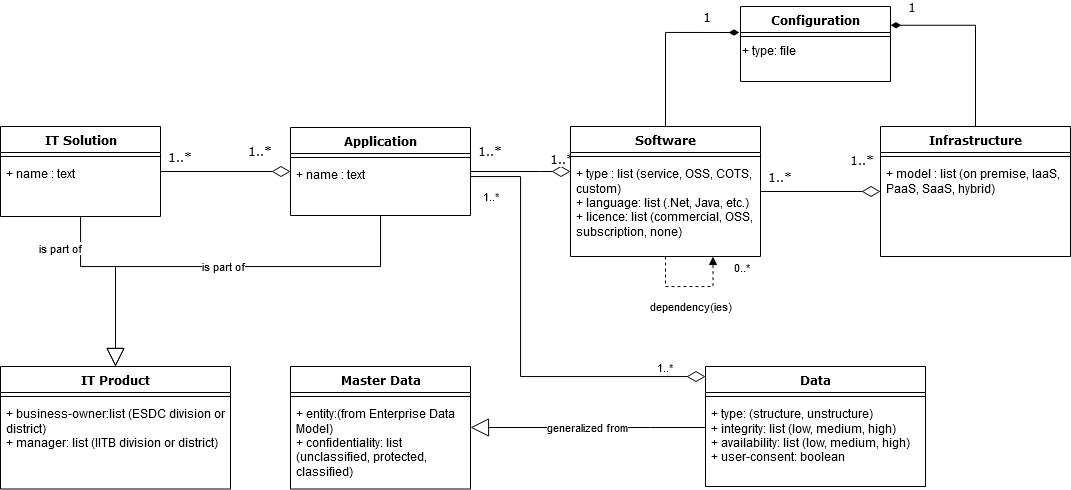

Figure 2. Relationship model between the different software elements


An IT Solution is made up of one or more Applications; it is essentially a grouping of Applications.

An Application is part of the Application Portfolio Management (APM) Program and is made up of one or more pieces of software (more commonly referred to as software components). An Application also uses or produces Data through its software components. In an Application, software and data are separate constructs.

A software component is a single code base: a grouping of files (binaries, or text that will be compiled into binaries) that will execute on an infrastructure. A software component may depend on other software components. This is often the case with libraries: software components built by other teams, adopted under Open Source Software licences, or purchased from a third-party vendor. Software is expected to have at least one configuration setting (e.g., a file) specific to the environment it is deployed to.

The Infrastructure is where the software is deployed. Traditionally, this is a server running an operating system; in the public cloud, it can be containers or other Platform as a Service (PaaS) offerings. Like software, Infrastructure also uses configuration settings.

The Data that an Application interfaces with is part of Master Data Management. It refers to the source(s) of truth for a particular Data Entity, has its confidentiality, integrity, and availability defined for the business context, and its end-user consent is made known to the Application.
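The relationships described above can be sketched as simple types. This is a hypothetical Python model that assumes only the cardinalities stated in the text (an IT Solution has one or more Applications, an Application has one or more software components, and each component has at least one environment-specific setting); the class names, field names, and sample values are illustrative and are not part of any EARB standard.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Infrastructure:
    name: str                                   # e.g., a server, container platform, or other PaaS
    config: Dict[str, str] = field(default_factory=dict)


@dataclass
class SoftwareComponent:
    name: str                                   # a single code base
    deployed_on: Infrastructure
    config: Dict[str, str]                      # environment-specific settings
    dependencies: List["SoftwareComponent"] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not self.config:
            raise ValueError(f"{self.name}: expected at least one environment-specific setting")


@dataclass
class DataEntity:
    name: str
    source_of_truth: str                        # system holding the authoritative copy
    confidentiality: str
    integrity: str
    availability: str


@dataclass
class Application:
    name: str
    components: List[SoftwareComponent]         # one or more
    data: List[DataEntity] = field(default_factory=list)  # kept separate from software

    def __post_init__(self) -> None:
        if not self.components:
            raise ValueError(f"{self.name}: an Application needs at least one software component")


@dataclass
class ITSolution:
    name: str
    applications: List[Application]             # one or more

    def __post_init__(self) -> None:
        if not self.applications:
            raise ValueError(f"{self.name}: an IT Solution groups at least one Application")

    def all_components(self) -> List[SoftwareComponent]:
        # Flatten the grouping: every software component across all Applications.
        return [c for app in self.applications for c in app.components]


# Hypothetical example values, for illustration only.
vm = Infrastructure("linux-vm", {"os": "Linux"})
lib = SoftwareComponent("shared-auth-lib", vm, {"env": "dev"})
api = SoftwareComponent("benefits-api", vm, {"env": "dev"}, dependencies=[lib])
client = DataEntity("Client", "client-master-db", "Protected B", "high", "high")
app = Application("Benefits Intake", [api, lib], data=[client])
solution = ITSolution("Benefits Processing", [app])
print([c.name for c in solution.all_components()])
```

Keeping `DataEntity` as its own type, referenced by but not owned by a software component, reflects the statement that software and data are separate constructs within an Application.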

IT Product

An IT Product consists of either an IT Solution or an Application. The decision to scope a Product towards an IT Solution or an Application depends on the organization’s particular business context.

The idea behind moving towards Product Management is that IT Solutions and Applications are designed to grow and change over time, unlike projects, which have a single beginning, middle, and end. As a result, IT Solutions and Applications will need different investment management methods, ones that support continual improvement.

The “IT Product” is the technical portion of a greater “Product” offering: the bundling of services offered to clients17.

IT-Enabled Project

A temporary endeavour undertaken to create a unique technology product, service, or result. The temporary nature of IT-Enabled projects indicates that they have a definite beginning and end.

This definition is adapted from the one in ESDC’s Directive on Project Management to include the IT aspect.

IT Product Owner

A person who represents the business or user community and is responsible for working with that community to determine what features will be in the product release.

IT Product teams

Comprises the set of DevOps teams needed to operate and maintain the suite of software that the given IT Product is made of. Managers and orchestrators (e.g., the lead architect) are also part of an IT Product team.

Conventional IT Solution Delivery Model

For this Strategy, we define the conventional IT Solution Delivery Model as the current status quo.

The conventional model follows the PMLC gated process and favours advance planning and rigid execution of a plan based on committed scope, timeline, and costs. It reports against those commitments to determine the health of the IT project.

In the conventional model, IT teams are organized by function and expect work to be handed off along the development chain so that each team can perform its functional tasks. These hand-offs are scoped by each team’s function, not by the project’s overall outcome.

In the conventional model, business benefits are expected to be realized as the IT Project closes and business operations change to use the new set of capabilities the IT Project provided. The Benefits Realization plan expects the business owner to monitor and report discrepancies between planned and actual benefits. Discrepancies are handled through change requests or new projects.

Target IT Solution Delivery Model

The Target IT Solution Delivery Model has the same objective as the conventional one: delivering business value for money while reducing risk through the use of technology. Unlike the conventional model, it will do so by:

- Limiting the size of IT Projects

- Defining each IT Project, in its entirety, as one iterative step towards an organizational business goal

- Requiring commitments from business sponsors throughout the execution of the IT Project

- Favouring a DevOps mindset to improve information flow and accelerate delivery (i.e., using integrated delivery teams rather than a series of distributed functional teams)

- Measuring the business value obtained over time, rather than adherence to strict schedule, cost, and scope estimates

Appendix E - Acronym List Definition

| Acronym | Definition |

|---|---|

| ARC | Architecture Review Committee |

| BPMO | Branch Project Management Office (IITB) |

| BRM | Business Relationship Management |

| CCoE | Cloud Community of Excellence |

| CDO | Chief Data Office |

| CFOB | Chief Financial Officer Branch |

| CIPSC | Cloud Implementation Project Steering Committee |

| DGPOC | Director General Project Oversight Committee |

| EA | Enterprise Architecture |

| EARB | Enterprise Architecture Review Board |

| EPMO | Enterprise Project Management Office |

| IAERMB | Internal Audit and Enterprise Risk Management Branch (formerly Internal Audit Services Branch) |

| ICAAD | Integrated Corporate Accounting and Accountability Directorate |

| OCMC | Operations Change Management Committee |

| PMLC | Project Management Life Cycle as defined by CFOB |

| PMP | Project Management Plan |

| PPOC | Project Portfolio Operations Committee |

| PPPM | Policy on Project and Program Management |

| PPRC | Portfolio Review Committee |

| TSDM | Target Solution Delivery Model |

| TSWG | Technical Standards Working Group |

Appendix F - Statistics regarding Large IT-Enabled Projects

The following are statistics and references regarding the problems and success rates of large IT-enabled projects.

1) Standish Group study

The Standish Group, a research advisory organization that focuses on software development performance18, found that “of 3,555 projects from 2003 to 2012 that had labour costs of at least $10 million, only 6.4% were successful. The Standish data showed that 52% of the large projects were “challenged” meaning they were over budget, behind schedule or didn’t meet user expectations. The remaining 41.4% were failures — they were either abandoned or started anew from scratch.”19

The Standish Group study and its results are also cited in Chapter 3 of the November 2006 Report of the Auditor General of Canada (statements 3.5 and 3.6); see item 3 below.

2) 2016 and 2019 House of Commons Questions (projects of more than $1M)

Thanks to an Ottawa Civic Tech project, a dataset on large government IT projects20 was released, containing the responses to two written questions in the House of Commons (June 2016 and May 2019). Each question asked federal government departments to report ongoing or planned IT projects over $1M.

We find that:

- Of the 94 projects that contain sufficient data to compare schedules: 9% are on schedule, 4% are ahead of schedule, and 87% are behind schedule

- Of the 97 projects that contain sufficient data to compare budgets: 26% are within 10% of their original estimates, 28% are between 10% and 50% above their original estimates, 28% are more than 50% above their original estimates, and 19% are more than 10% below their original estimates.
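The budget categories above compare each project’s current budget with its original estimate. The sketch below shows one way such a classification could be computed; it is not the method used by the Ottawa Civic Tech analysis. It assumes variance is measured as (current − original) / original, that “within 10%” means within ±10%, and that the remaining category means more than 10% below the original estimate. The function names and sample budgets are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def variance_pct(original: float, current: float) -> float:
    """Percentage by which the current budget differs from the original estimate."""
    if original <= 0:
        raise ValueError("original estimate must be positive")
    return (current - original) / original * 100


def budget_band(original: float, current: float) -> str:
    # Assumed band boundaries: within +/-10%, 10-50% above, more than 50% above,
    # and more than 10% below the original estimate.
    v = variance_pct(original, current)
    if v < -10:
        return "more than 10% below"
    if v <= 10:
        return "within 10%"
    if v <= 50:
        return "10% to 50% above"
    return "more than 50% above"


def band_shares(projects: Iterable[Tuple[float, float]]) -> Dict[str, int]:
    """Share of projects (in whole percent) falling into each budget band."""
    counts = Counter(budget_band(o, c) for o, c in projects)
    total = sum(counts.values())
    # Each share is rounded independently, so the shares can sum to 99% or 101%.
    return {band: round(100 * n / total) for band, n in counts.items()}


# Hypothetical (original, current) budgets in $M, for illustration only.
sample = [(2.0, 2.1), (5.0, 6.5), (1.5, 3.0), (4.0, 3.0)]
print(band_shares(sample))
```

Independent rounding of each share also explains why the four reported budget shares (26%, 28%, 28%, and 19%) add up to 101% rather than exactly 100%.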

3) Chapter 3 of the November 2006 Report of the Auditor General of Canada21

The audit sampled seven projects and assessed them against four key criteria (governance, business case, organizational capacity, and project management).

In statements 3.5 and 3.6, the report highlights the Standish Group study (see item 1 above) on the low success rate of large IT projects. It also refers to a 2000 report highlighting an emerging trend in IT projects: “most new projects fit within its ‘Recipe for Success,’ which limits the size of projects to six months and six people”.

The report concluded that, overall, the government had made little progress since the last audit (1997), had not adequately explained the results expected to be achieved as part of a business case, and had not adequately assessed its capacity to take on high-risk IT projects. However, the report did conclude that four of the seven sampled projects were well managed.

4) 2010 Spring Report of the Auditor General of Canada22

The report examined whether five of the government entities with the largest IT expenditures had adequately identified and managed risks related to aging IT systems. All of them indicated that aging IT is a significant risk, and the majority included it in their corporate risk profile (ESDC is one of them).

The report indicated that investment plans were not supported by a funding strategy providing sufficient sources of funds to complete all the initiatives needed to manage aging IT. In 2008, HRSDC prepared a Long-Term Capital Plan consisting of 20 projects and initiatives costing $947.4 million over 5 years. The plan was not approved by senior management.

The report indicated that the Chief Information Officer Branch (CIOB) at TBS had been aware of the significant risks of aging IT for over a decade. CIOB responded that it agreed with the recommendations, but that responsibility for funding these initiatives rests with departmental deputy heads, not with CIOB.

5) Chapter 2 - June 2011 Status Report of the Auditor General of Canada (Large IT Projects)23

This report examined progress since the 2006 report on seven large IT projects, and also examined a newly selected project approved by the Treasury Board.

It found that the government had made unsatisfactory progress on its commitments in response to the 2006 recommendations.

6) Report 5 – 2015 Spring Report of the Auditor General of Canada (IT investments by CBSA)24

This report presents the results of a performance audit: an independent, objective, and systematic assessment of how well the government is managing its activities, responsibilities, and resources.

Overall, the report found that CBSA “had significant challenges in managing its information technology (IT) portfolio in a way that ensured it could deliver IT projects that meet requirements and deliver expected benefits.”

7) Report 1 – 2018 Spring Report of the Auditor General of Canada (Building and Implementing the Phoenix Pay System)25

The audit focused on whether Public Services and Procurement Canada (PSPC) effectively and efficiently managed and oversaw the implementation of the new Phoenix pay system.

The report concludes that “the Phoenix project was an incomprehensible failure of project management and oversight. Phoenix executives prioritized certain aspects, such as schedule and budget, over other critical ones, such as functionality and security. Phoenix executives did not understand the importance of warnings that the Miramichi Pay Centre, departments and agencies, and the new system were not ready. They did not provide complete and accurate information to deputy ministers and associate deputy ministers of departments and agencies, including the Deputy Minister of Public Services and Procurement, when briefing them on Phoenix readiness for implementation. In our opinion, the decision by Phoenix executives to implement Phoenix was unreasonable according to the information available at the time. As a result, Phoenix has not met user needs, has cost the federal government hundreds of millions of dollars, and has financially affected tens of thousands of its employees.”

8) 18F’s February 2020 presentation at Michigan Senate Appropriations Committee26

In February 2020, 18F (the U.S. equivalent of the Canadian Digital Service) gave a presentation to Michigan’s Senate Appropriations Committee. 18F was created in 2014 by the Presidential Innovation Fellows (PIF, established in 2012 by the White House) to improve and modernize government technology27. The presentation focused on technology procurement and its challenges. In short, it argued that government departments are often unable to frame problems as manageable parts and, as a result, lock themselves into lengthy, large, and complicated contracts with vendors.

9) Delivering large-scale IT projects on time, on budget, and on value, McKinsey Digital, 201228

A 2012 study by McKinsey Digital, in collaboration with the University of Oxford, of large IT projects (greater than $15 million) suggests that, on average, they run 45% over budget and 7% over time, while delivering 56% less value than predicted. Software projects run the highest risk of cost and schedule overruns. The research also found that the longer a project is scheduled to last, the more likely it is to run over time and over budget. It recommends four ways to improve project performance, two of which are building effective teams and using short delivery cycles.

Inline references

- Business Delivery Modernization’s (BDM) 2nd main objective: Policy agility

- 2010 Spring Report of the Auditor General of Canada

- See Appendix F on large IT-enabled project statistics

- How is the Public Service Managing Large IT Projects?, a synthesis of 6 Auditor General of Canada reports

- Mark Schwartz, War & Peace & IT

- Referencing ESDC’s Policy on Programme and Project Management’s 2 key objectives: #2 (focus on benefits) and #4 (intention to reduce risks)

- DORA State of DevOps 2019, pages 40, 51, and 53

- See ESDC Chief Data Office Data Strategy on the use of Analytics to inform Service improvement Decisions

- Enabling conditions, not just heroics, Honey Dacanay, Nov 2020

- Team Topologies, 2019, by Matthew Skelton and Manuel Pais

- The four mandatory procedures are: Enterprise Architecture Assessment, APIs, Privacy and Monitoring of Networks, and IT Security Controls

- See TBS Service and Digital Target Enterprise Architecture, moving towards micro-services as a means to better manage technical debt

- This definition is reworded from The Open Group’s Product standard definition.

- About the Standish Group (standishgroup.com/about)

- Why we love modular contracting, by 18F

- 18F’s February 2020 presentation at Michigan Senate Appropriations Committee

- See this article from McKinsey Digital, 2012