IITB User Experience Maturity Assessment

This report identifies areas for improvement in user experience (UX) processes and the role UX plays in the Innovation, Information and Technology Branch (IITB).

Assessment and report completed by Elmina Iusifova & Leigh Gardner

Context

This report summarizes the results of a user experience (UX) maturity assessment and provides recommendations for the Innovation, Information and Technology Branch (IITB) to increase its level of maturity in the UX domain. The assessment used the Nielsen Norman Group UX maturity model to cover common UX components, including strategy, process, culture and outcomes. It measures IITB’s desire and ability to deliver user-centric products and services that improve business operations, and establishes a score for IITB’s overall level of UX maturity.

UX in IITB

Until now, knowledge and information about UX within IITB have been limited. It was unclear what guidance and processes were followed for UX during the product development lifecycle (including digital products and applications), and whether UX contributed to project success or return on investment. As a result, some of the main UX practices and processes may have been left out of the development lifecycle.

What is UX?

User experience is a process that teams use to create products that provide meaningful and relevant experiences to users. UX is not just about products, websites and applications; it refers to any interaction a user has with services, processes, organization messaging, etc. UX is about formulating solutions based on how people (users) think and act. It is a strategic process that aims to make something easier, more understandable and more intuitive in order to meet users’ needs and expectations. Focusing on user needs at all stages of development is essential to ensure that products and services enable users to accomplish their tasks quickly and effectively.

Government of Canada policy

As per its Digital Standards and Policy on Service and Digital, the Government of Canada (GC) is committed to the development of user-centric products and services. As a result, teams are required to emphasize UX and user engagement throughout the development lifecycle. In recent years, online platforms, applications, programs and services have become essential for businesses and governments. As such, it is critical for departments to develop programs and services that provide Canadians with an intuitive digital experience. To do this, the GC has highlighted the importance of UX within its policies and plans, so that departments deliver products and solutions that meet users’ needs and provide services comparable to commercial service offerings (e.g., social media, online shopping, online banking). By understanding users’ needs, each department can broaden its services and serve more Canadians across the country.

The following Standards, Policies, Plans, and National Commitments set the overarching vision for UX across the GC:

- The Digital Nations Charter 2020 commits Canada to working toward core principles of digital development with a focus on user needs.

- The Government of Canada Digital Standards, in particular: design with users, iterate and improve frequently, and build in accessibility from the start.

- The Policy on Service and Digital articulates how GC organizations manage service delivery in the digital era by involving users directly and continuously improving their products and services throughout their lifecycle.

- Canada’s Digital Ambition 2022 promotes priorities and activities which will help departments move toward the digital delivery of programs and services that users need.

These GC policies, centered on “user needs” and “design with users”, give IITB an opportunity to improve its user engagement abilities.

Goals and objectives

The goal of this assessment is to better understand IITB’s UX practices and processes and to determine IITB’s level of UX maturity. To that end, the IT Strategy team developed an assessment with two objectives. The first objective was to measure IITB’s level of UX maturity: to determine the quality and consistency of research and design processes, resources, tools and operations, and to understand how widely UX is used in product development and service delivery. The assessment identified areas for improvement to strengthen UX processes and develop the capacity to deliver user-centric products and services. The second objective was to provide guidance and recommendations on how to improve the level of UX maturity in IITB and align with GC policies.

Participants

This research focused on teams throughout IITB that have a product, service or process that requires UX assistance. The survey was conducted by the manager of the team from each directorate in IITB. A total of 122 participants from 9 different divisions in IITB took the survey.

Consultations

Before conducting the assessment, IT Strategy consulted with the groups below to generate ideas on preparing a survey to measure IITB’s level of UX maturity.

- Youth Digital Gateway, Digital Experience and Client Data team, and the Accelerator Hub team from Transformational Management Branch (TMB);

- CX Centre of Expertise, under the Strategic Directions Directorate in Citizen Service Branch (CSB), Service Canada; and

- Transformation Management Directorate under the Transformation Management Branch (now with the Strategic and Service Policy Branch).

Once the survey results were collected, these groups were consulted again to develop solutions and recommendations for IITB to advance its level of UX maturity.

Survey creation and data storage

To create the survey, IT Strategy collaborated with the Interactive Fact-finding Service (IFFS) team, using an IFFS tool developed internally within IITB. All results have been stored on the IT Strategy team’s SharePoint site.

Metrics and methodology

The survey was based on the Nielsen Norman Group UX maturity model, which incorporates new norms and adapts to the current state of the industry. The survey questions covered different aspects of UX, organized into 4 main factors (1 to 4) and 12 subfactors (a to l).

1. Strategy

a. vision;

b. planning and prioritization;

c. budget;

2. Culture

d. awareness;

e. appreciation and support;

f. competency;

g. adaptability;

3. Process

h. methods;

i. collaboration;

j. consistency;

4. Outcomes

k. impact of the design;

l. measurement.

Research

The IT Strategy team conducted an online survey containing 13 questions to be answered within a two-week period between October 24 and November 10, 2022. The survey took about 15 minutes to complete and focused on four UX factors (1. Strategy; 2. Culture; 3. Process; and 4. Outcomes), which are outlined in the Nielsen Norman Group UX maturity model. The survey also contained a section that asked participants to provide feedback on how UX is conducted within their team and division.

Once completed, the survey produced a score on a scale of 1 to 5 for each question and subfactor. To determine which of the 6 stages of the Nielsen Norman Group UX maturity model IITB currently occupies, decimal scores were rounded to the nearest whole number: scores with a decimal part greater than 0.5 were rounded up, whereas scores with a decimal part of 0.5 or less were rounded down. The scores for each subfactor were calculated for each IITB division and then used to produce an overall score for the branch. To convert the scale from 1-5 to 1-6, the survey results were multiplied by 1.2.
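The scoring rule described above can be sketched in a few lines of Python. This is an illustration only, not the IFFS tool's actual implementation: the function names are hypothetical, and it assumes the 1.2 multiplier is applied before rounding, which is consistent with results reported in the form "3.7 (~4)/6". Note that multiplying by 1.2 maps the bottom of the 1-5 scale to 1.2 rather than 1.

```python
from decimal import Decimal, ROUND_HALF_DOWN

# Stated in the report: 1-5 survey results were multiplied by 1.2.
SCALE_FACTOR = Decimal("1.2")


def to_six_point(score_1_to_5):
    """Convert a 1-5 survey score to the 6-level scale (hypothetical helper)."""
    return Decimal(str(score_1_to_5)) * SCALE_FACTOR


def to_level(score_out_of_6):
    """Round to the nearest whole level; a decimal part of exactly 0.5 rounds down."""
    value = Decimal(str(score_out_of_6))
    return int(value.quantize(Decimal("1"), rounding=ROUND_HALF_DOWN))


print(to_six_point(5))  # 6.0
print(to_level(3.5))    # 3
print(to_level(3.7))    # 4
```

Decimal arithmetic is used here so that a score of exactly 3.5 is treated as a true half and rounds down, as the report specifies.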

Levels and scoring

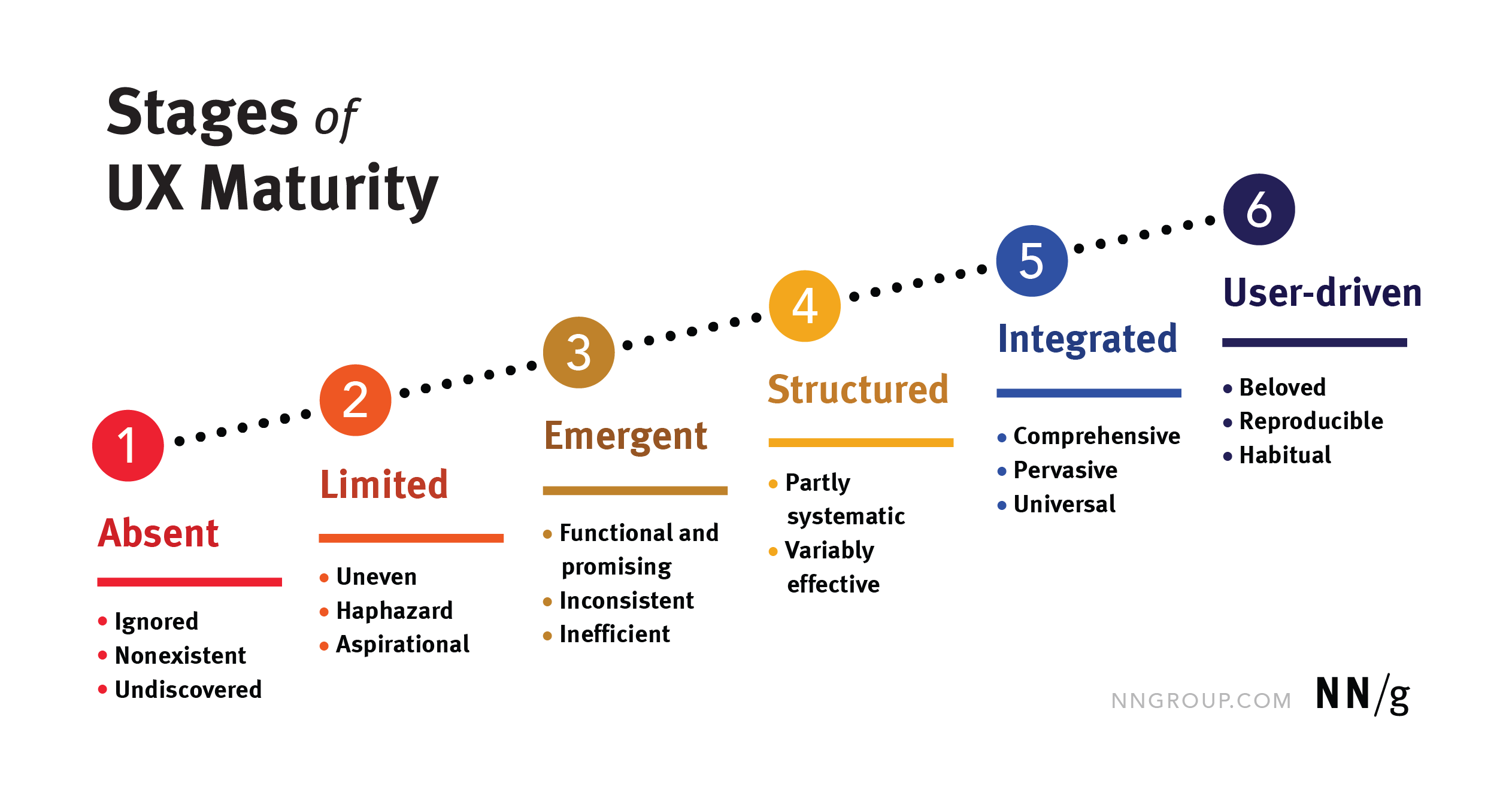

The maturity level of IITB was determined based on 6 levels:

Level 1. Absent - UX is ignored or non-existent.

Level 2. Limited - UX work is rare, done haphazardly, and lacking importance.

Level 3. Emergent - UX work is functional and promising but done inconsistently and inefficiently.

Level 4. Structured - The organization has a semi-systematic UX-related methodology that is widespread, but with varying degrees of effectiveness and efficiency.

Level 5. Integrated - UX work is comprehensive, effective, and pervasive.

Level 6. User-driven - Dedication to UX at all levels leads to deep insights and exceptional user-centered design outcomes.

The factors and their subfactors produce a level of UX maturity from absent to user-driven (see Figure 1).

This figure describes each stage of UX maturity from 1. Absent to 6. User-driven.
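As a minimal sketch, a score on the 6-level scale can be mapped to its named stage as follows. The level names come from the list above; the function name, the clamping to the 1-6 range and the use of `math.ceil` to implement the "0.5 or less rounds down" rule are assumptions made for illustration.

```python
import math

# Level names as listed in this report.
LEVEL_NAMES = {
    1: "Absent",
    2: "Limited",
    3: "Emergent",
    4: "Structured",
    5: "Integrated",
    6: "User-driven",
}


def maturity_level(score):
    """Return (level, name) for a score out of 6 (hypothetical helper).

    ceil(score - 0.5) rounds to the nearest whole number while sending
    an exact .5 down, matching the rounding rule in the Research section.
    """
    level = math.ceil(score - 0.5)
    level = min(max(level, 1), 6)  # assumption: clamp to the defined levels
    return level, LEVEL_NAMES[level]


print(maturity_level(3.7))  # (4, 'Structured')
print(maturity_level(3.5))  # (3, 'Emergent')
```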

Analysis

The following section describes each factor, subfactor and overall score for IITB.

Strategy factor

This section outlines the branch level for UX vision, planning and prioritization, and budget.

- Subfactor a. Vision considers whether things like yearly objectives, cross-team alignment, and leadership are UX-oriented.

IITB’s score for vision is 3.7 (~4)/6, structured. This means the organization has strong user-centered ideas in its vision, but that vision is not fully understood or used as a motivator across the organization.

- Subfactor b. Planning and prioritization accounts for whether aspects like development schedules and processes, operationalized systems, and standardized decision-making take UX into account.

IITB’s score for planning and prioritization is 3.4 (~3)/6, emergent. This means research occurs in response to requests and is not strategic or related to a high-level strategy. Quick wins are prioritized over long-term investments.

- Subfactor c. Budget considers whether adequate resources (people and time) are allocated to UX.

IITB’s score for budget is 2.8 (~3)/6, emergent. This means that, when it comes to getting the work done, UX budget and team members are spread thinly among multiple teams.

The strategy factor focuses on the high-level decisions and planning that contribute to the success of UX work before it begins, and on the availability of UX resources. Overall, the branch scores 3.3 (~3)/6 on the strategy factor, which is emergent; most teams scored around level 3/6.

Culture factor

This section summarizes IITB’s UX awareness, appreciation and support, competency, and adaptability.

- Subfactor d. Awareness reviews how widespread knowledge of UX and its benefits is across the organization.

IITB’s score for awareness is 4.0 (~4)/6, structured. This means there is an organization-wide understanding of UX. UX is relatively respected by other teams and peers.

- Subfactor e. Appreciation and support shows whether people outside the UX team support and are involved with UX work.

IITB’s score for appreciation and support is 3.5 (~3)/6, emergent. This means there might be some support for UX from some leaders, but most are still looking for proof that UX is worth the investment and are unable to articulate its value. Especially for the less tangible aspects of UX (discovery research, qualitative testing, etc.), there is a widespread lack of respect.

- Subfactor f. Competency assesses whether the organization has dedicated UX roles, a breadth of skills in UX teams, and pertinent hiring practices.

IITB’s score for competency is 3.0 (~3)/6, emergent. This means the limited UX staff are isolated, and their skill sets are often incomplete. The organization lacks understanding of the multifaceted benefits of a user-centered design process.

- Subfactor g. Adaptability addresses whether the organization is willing to advance best practices and adjust approaches to improve, and is logistically able to adapt to changing needs.

IITB’s score for adaptability is 4.3 (~4)/6, structured. This means that some (but not all) teams at the organization desire and plan to repeat, grow, and improve UX work.

The culture factor focuses on UX literacy and UX culture. It circulates ideas that contribute to the organization’s understanding of the purpose and value of UX. Overall, the branch scores 3.7 (~4)/6 on the culture factor, which is structured; most teams scored around level 4/6.

Process factor

This section measures how frequently IITB uses UX methods, and how collaborative and consistent its UX work is.

- Subfactor h. Methods assesses whether there is established use of user-centric techniques throughout the entire product lifecycle: design practices, qualitative and quantitative research approaches, and iteration.

IITB’s score for methods is 3.5 (~3)/6, emergent. This means when research methods are applied in the organization, they are often misused or used too late in the development process to be impactful.

- Subfactor i. Collaboration determines whether cross-functional team collaboration exists and brings new, diverse ideas.

IITB’s score for collaboration is 4.1 (~4)/6, structured. This means teams collaborate in design workshops and by participating in research. Most teams communicate frequently in Agile ceremonies, such as stand-ups and retrospectives.

- Subfactor j. Consistency addresses the existence and usage of shared systems, frameworks, and tools that allow consistent inclusion of a UX mindset in various processes.

IITB’s score for consistency is 3.0 (~3)/6, emergent. This means that the design process and outputs are inconsistent, due to isolated UX staff serving conflicting needs and priorities across the organization. UX is part of the development process, but only at specific times, not from beginning to end. UX processes are still occasionally seen as a detriment to project schedules and team creativity.

The process factor includes all UX work that occurs (research, design, content creation, etc.) within an organization. Overall, the branch scores 3.5 (~3)/6 on the process factor, which is emergent; most teams scored around level 3/6.

Outcomes factor

This section assesses the impact of design and existing user-centric metrics.

- Subfactor k. Impact of the design looks at the quality and effectiveness of the implemented design from a user-centered perspective.

IITB’s score for impact of the design is 4.3 (~4)/6, structured. This means that teams understand the idea of quality in design and research and there might be a process in place for tracking it. However, some teams may not employ these user-centered goals regularly to drive projects.

- Subfactor l. Measurement assesses whether an organization has clear user-centered metrics and a process in place for tracking them.

IITB’s score for measurement is 3.2 (~3)/6, emergent. This means that not all teams use UX-related metrics, and, if they do, the UX metrics vary from team to team. Most of the time, UX metrics do not align with an organization-wide strategy and the results are misused.

The outcomes factor highlights the results of UX research and design, where any exists in the organization. Overall, the branch scores 3.75 (~4)/6 on the outcomes factor, which is structured; most teams scored around level 4/6.
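The four factor scores reported in this section are consistent with a simple average of their subfactor scores. The report does not state the aggregation formula (the Research section says scores were calculated per division and then combined), so the sketch below is an assumption used only to cross-check the published figures; the dictionary and function names are hypothetical.

```python
from statistics import mean

# Subfactor scores out of 6, as reported in the Analysis section.
SUBFACTOR_SCORES = {
    "strategy": {"vision": 3.7, "planning and prioritization": 3.4, "budget": 2.8},
    "culture": {
        "awareness": 4.0,
        "appreciation and support": 3.5,
        "competency": 3.0,
        "adaptability": 4.3,
    },
    "process": {"methods": 3.5, "collaboration": 4.1, "consistency": 3.0},
    "outcomes": {"impact of the design": 4.3, "measurement": 3.2},
}


def factor_score(factor):
    """Unweighted mean of a factor's subfactor scores (assumed aggregation)."""
    return mean(list(SUBFACTOR_SCORES[factor].values()))


for name in SUBFACTOR_SCORES:
    print(f"{name}: {factor_score(name):.2f}")
```

Rounded to the precision used in the report, these means match the stated factor scores of 3.3, 3.7, 3.5 and 3.75.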

Level of UX Maturity in IITB

According to Nielsen Norman Group, when UX maturity is at level 3, emergent, the organization sees more UX work happening across teams, but efforts are generally low-budget, unstable, and do not align to any organization-wide strategy. A limited number of UX-specific roles exist, and they are left to function without centralized UX resources and frameworks. Understanding of UX value varies across teams, and user-centered methods are applied inconsistently. Although some teams prioritizing UX may see the benefits and results of their efforts, most leaders still need convincing. For the most part, UX is still seen as “nice to have” and it is often the first to go when trade-offs must be made. Based on the results received, the divisions’ levels of UX maturity average 3.5 (~3)/6. IITB’s overall level of maturity, averaged across the 4 main factors, is also 3.5 (~3)/6.

Advantages and disadvantages of being at level 3, emergent

According to Nielsen Norman Group:

The advantage of being at stage 3 is that user-centric methods and approaches do exist, but they lack cohesion and integration. UX work might be involved in some projects and processes, but it is not consistently well-executed and incorporated into strategy and planning. Some individual teams might realize the value of UX, and some leaders may even support and advocate for UX, but since investment is low, there are skill gaps that need to be addressed.

The disadvantage and risk of being at stage 3 is complacency. The organization might feel satisfied with this level thinking “we do enough UX now” and get stuck at stage 3. UX must be functional to have true impact, but if an organization is still seeking proof of UX value, it will be difficult to convince leadership that not only does UX work need to be done, but additional resources must be invested for more tangible actions.

Recommendations

How to level up

According to the Nielsen Norman Group maturity model, to move from level 3 (emergent) to level 4 (structured), the organization needs to focus on:

- the process factor, by applying and supporting the UX work that occurs (research, design, content creation, etc.) within an organization;

- the culture factor, by continuing to build awareness of UX, improving understanding of UX value, and including UX in strategic conversations and initiatives; and

- the strategy factor, by including UX disciplines in the high-level decisions and planning that contribute to a project’s success before it begins.

The organization should consider concentrating on the following activities:

- Provide professional development programs to upskill existing UX staff

- Create UX training and education for cross-functional roles (especially teams without regular UX support)

- Establish centralized UX resources such as design systems and research repositories

- Create unifying design principles that connect UX work across teams

- Document and share a standardized design process and supporting methods across teams

- Enhance collaboration and communication opportunities among UX staff members by instituting a regular UX-meeting cadence

- Standardize design-quality metrics and benchmark UX in order to track, communicate and improve over time

The following recommendations are proposed for IITB to consider in support of the activities listed above:

| Recommendation | Activity | Approx. timeline |
| --- | --- | --- |
| 1. Build User Experience (UX) working group | 1.1. Measure. Start with a kick-off workshop to measure the attitude of IITB teams towards UX concepts and approaches. This gives an indication of employees’ familiarity with and openness to UX processes, which can help tailor the UX approach. | Summer 2023 |
| | 1.2. Investigate. Investigate and provide a reusable set of UX disciplines/practices that teams can leverage. | Summer 2023 |
| | 1.3. Collaborate. Enhance collaboration and communication opportunities among employees who have UX work experience across teams and divisions, and encourage sharing of knowledge and experience by instituting a regular UX-meeting cadence. | Summer 2023 |
| | 1.4. Educate. Create UX training and education for cross-functional roles (especially teams without regular UX support). Provide professional development programs to upskill existing staff in teams where UX is essential. Education is important to create common ground and foster awareness of correct ways to implement UX design. An employee who is very new to UX could initially be educated in UX concepts and techniques. At the same time, other topics arise along the way, such as the accessibility and consistency of features. Educate leaders. Create a widely supported user-centered mindset in the organization by educating leaders in design thinking; they will then educate their staff and so bring design thinking into multiple projects and initiatives. | Fall 2023 |
| 2. Create UX Centre of Excellence | 2.1. Create. Create a UX Centre of Excellence to ensure effective execution of projects and elements involving UX for IITB teams, and to assess whether sufficient UX work was done during the project management lifecycle. The UX Centre of Excellence will make proposals to IT teams with an outline or plan that relies on in-depth research and investigation. It will be up to UX Governance to articulate a problem-solution relationship in a proposal and offer the best route to overcoming the problem. A change can be anything from tweaking the position of user interface elements to conducting user research on product or service delivery. | 2024 |
| | 2.2. Make the UX process transparent. Keep the UX process transparent at all times by visualizing the design process steps and project roles in a visual roadmap, or by mapping all design components in a design system. A design system combines all design components, such as style guides and rules, and thus serves as a guide to how an organization conducts its design processes. | 2024 |
| | 2.3. Document and share. Document and share standardized design processes and supporting methods across teams, if they exist. Create unifying design principles that connect UX work across teams. Standardize design-quality metrics and benchmark UX in order to track and communicate improvements over time. To help teams become more ‘UX mature’, it is necessary to guide them along the way. The findings of the assessment indicate that concepts such as design sprints and minimum viable products (MVPs) are often very new and vague for teams with low UX maturity. It is therefore easy for teams to form their own interpretations of UX concepts and to talk at cross purposes. Creating common ground and a shared understanding of each other's expectations and roles is crucial to building a broad UX support base. | 2024 |
| | 2.4. Be present and approachable in the organization. Approachable UX practitioners lower the threshold for employees to collaborate, learn more about UX, or ask for a UX practitioner's perspective on their work. To make UX design widely accessible, UX practitioners could be present in relevant meetings and suggest themes for design sprints or feedback sessions. | 2024 |
| | 2.5. Hire more UX expertise. Where needed, hire more UX experts/consultants into specific UX roles to design and develop exceptional user experiences that engage and enable the people we serve. | 2024 |